Epoch Al 就与 OpenAI 合作的透明度问题致歉

刚刚来自 Epoch Al 的 Tamay 就其与 OpenAI 合作的 FrontierMath 项目透明度问题发表声明,承认在与 OpenAI 的合作中存在沟通和透明度方面的失误,并承诺未来将改进

FrontierMath (目前最难的数学测试,陶哲轩是其委员会委员)是一个旨在评估前沿数学模型能力的基准测试项目。Tamay 在声明中表示,Epoch Al 在与 OpenAI 的合作过程中,未能就 OpenAI 的参与程度向基准测试的贡献者,尤其是数学家们,进行充分的透明沟通

具体来说,Tamay 指出以下几个错误:

-

1. 披露时间过晚: 由于合同限制,Epoch Al 直到 FrontierMath 的第三次迭代(o3)发布前后才被允许披露与 OpenAI 的合作关系。Tamay 承认,他们应该在与 OpenAI 的谈判中更强硬地争取尽早向贡献者披露合作信息的权利

-

2. 沟通不一致: 虽然 Epoch Al 向部分数学家透露了他们获得了实验室的资助,但并未系统地向所有参与者说明这一情况,也没有明确指出合作的实验室是 OpenAI。这种不一致的沟通是一个错误

-

3. 未将透明度作为合作的前提: Tamay 表示,即使受到合同限制,他们也应该将与贡献者的透明度作为与 OpenAI 达成协议的不可协商的一部分。数学家们理应知道谁可能会访问他们的工作成果

针对数据使用问题,Tamay 承认 OpenAI 确实可以访问 FrontierMath 的大部分问题和解决方案,但有一个未被 OpenAI 看到的保留集,用于独立验证模型能力。他同时强调,双方有口头协议,这些材料不会被用于模型训练

Tamay 指出,OpenAI 的相关员工在公开场合将 FrontierMath 描述为“强保留”的评估集,这与他们的理解一致。他进一步强调,保持真正未受污染的测试集对实验室大有裨益

此外,Tamay 还提到,OpenAI 完全支持 Epoch Al 维护一个单独的、未被看到的保留集,作为防止过拟合和确保准确衡量模型进步的额外保障。FrontierMath 从一开始就被设计并呈现为一个评估工具,这些安排也体现了这一目的

英文全文:

Tamay

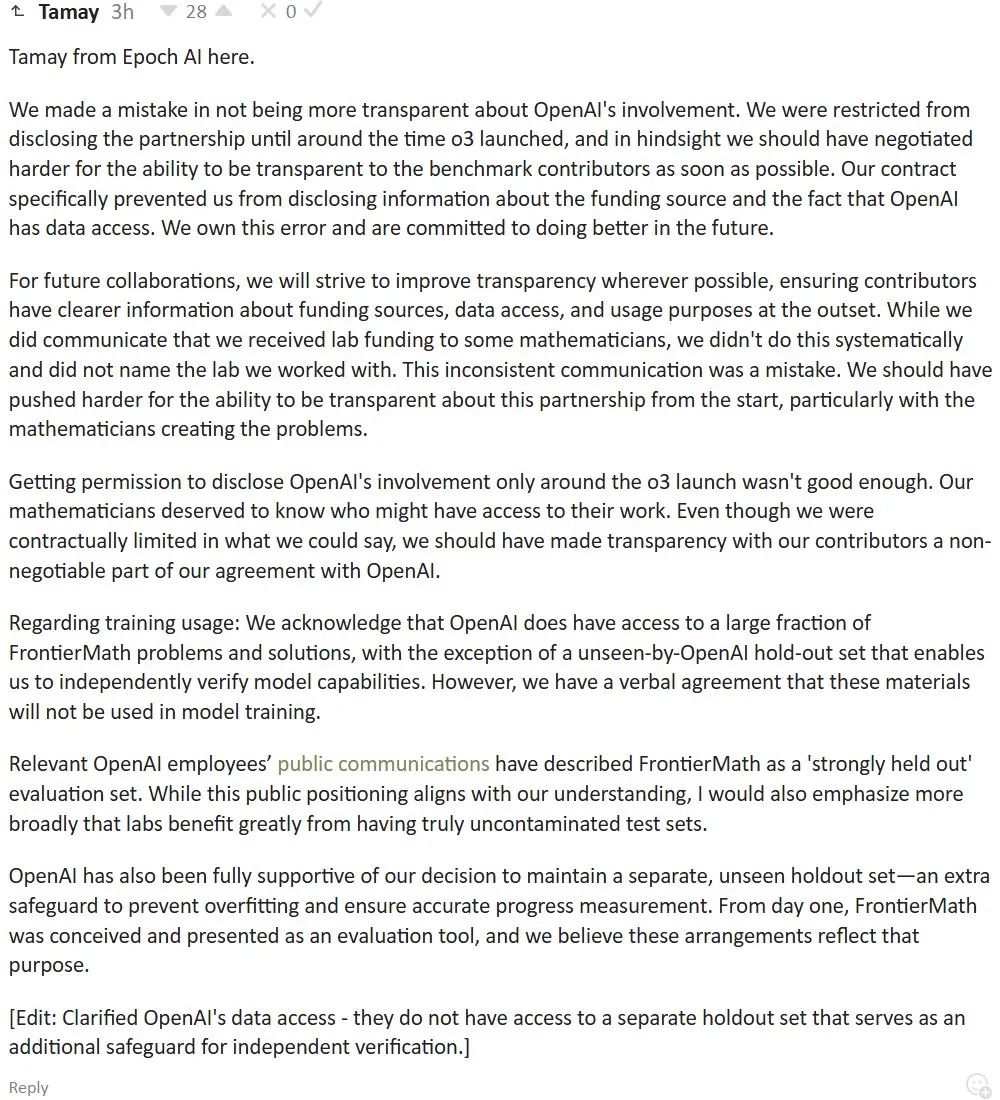

Tamay from Epoch Al here.

We made a mistake in not being more transparent about OpenAl’s involvement. We were restricted from disclosing the partnership until around the time o3 launched, and in hindsight we should have negotiated harder for the ability to be transparent to the benchmark contributors as soon as possible. Our contract specifically prevented us from disclosing information about the funding source and the fact that OpenAl has data access. We own this error and are committed to doing better in the future.

For future collaborations, we will strive to improve transparency wherever possible, ensuring contributors have clearer information about funding sources, data access, and usage purposes at the outset. While we did communicate that we received lab funding to some mathematicians, we didn’t do this systematically and did not name the lab we worked with. This inconsistent communication was a mistake. We should have pushed harder for the ability to be transparent about this partnership from the start, particularly with the mathematicians creating the problems.

Getting permission to disclose OpenAl’s involvement only around the o3 launch wasn’t good enough. Our mathematicians deserved to know who might have access to their work. Even though we were contractually limited in what we could say, we should have made transparency with our contributors a nonnegotiable part of our agreement with OpenAl.

Regarding training usage: We acknowledge that OpenAl does have access to a large fraction of FrontierMath problems and solutions, with the exception of a unseen-by-OpenAl hold-out set that enables us to independently verify model capabilities. However, we have a verbal agreement that these materials will not be used in model training.

Relevant OpenAl employees’ public communications have described FrontierMath as a ‘strongly held out’ evaluation set. While this public positioning aligns with our understanding, I would also emphasize more broadly that labs benefit greatly from having truly uncontaminated test sets.

OpenAl has also been fully supportive of our decision to maintain a separate, unseen holdout set—an extra safeguard to prevent overfitting and ensure accurate progress measurement. From day one, FrontierMath was conceived and presented as an evaluation tool, and we believe these arrangements reflect that purpose.

[Edit: Clarified OpenAl’s data access – they do not have access to a separate holdout set that serves as an additional safeguard for independent verification.]

⭐

(文:AI寒武纪)