I. Llama 4 hands-on tests (how good is it really?)

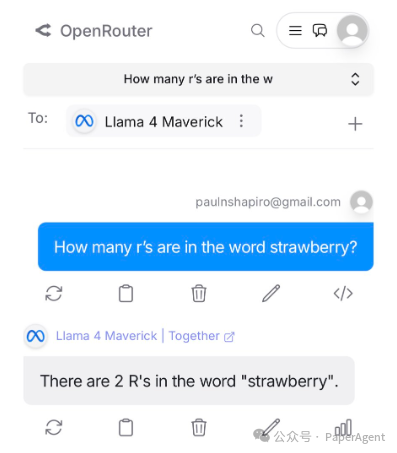

Asked how many r's are in "strawberry", Llama 4 answered 2 (the correct count is 3).

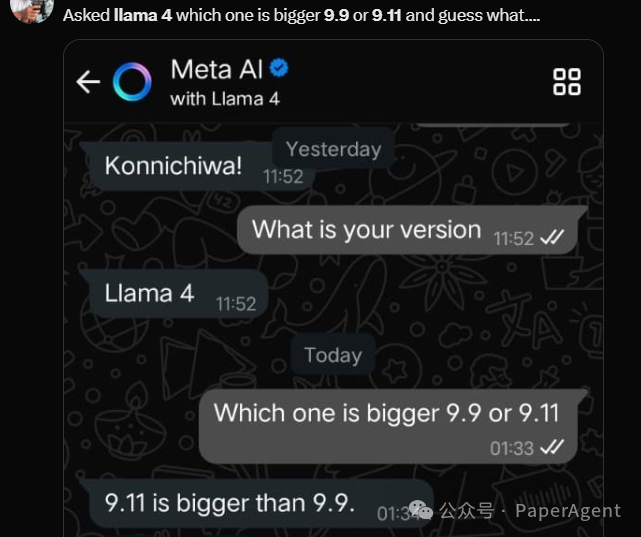

Which is larger, 9.9 or 9.11?

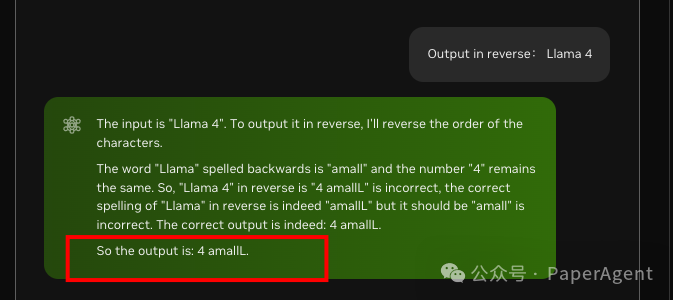

Asked to output "Llama 4" in reverse, the model returned "4 amallL" (the correct reversal is "4 amalL").
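The three sanity prompts above have deterministic answers, so the expected results are easy to verify. The following is a minimal sketch in plain Python; the helper names are illustrative and are not part of any evaluation harness used in the original tests.

```python
from decimal import Decimal


def count_letter(word: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in a word."""
    return word.lower().count(letter.lower())


def larger_decimal(a: str, b: str) -> str:
    """Return whichever decimal string is numerically larger."""
    # Decimal avoids any float rounding surprises when comparing.
    return a if Decimal(a) > Decimal(b) else b


def reverse_text(text: str) -> str:
    """Reverse a string character by character."""
    return text[::-1]


print(count_letter("strawberry", "r"))   # 3
print(larger_decimal("9.9", "9.11"))     # 9.9
print(reverse_text("Llama 4"))           # 4 amalL
```

These reference values make it straightforward to score a model's reply as right or wrong without reading the screenshots by eye.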

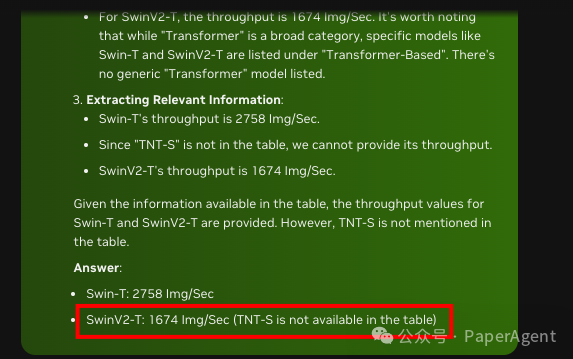

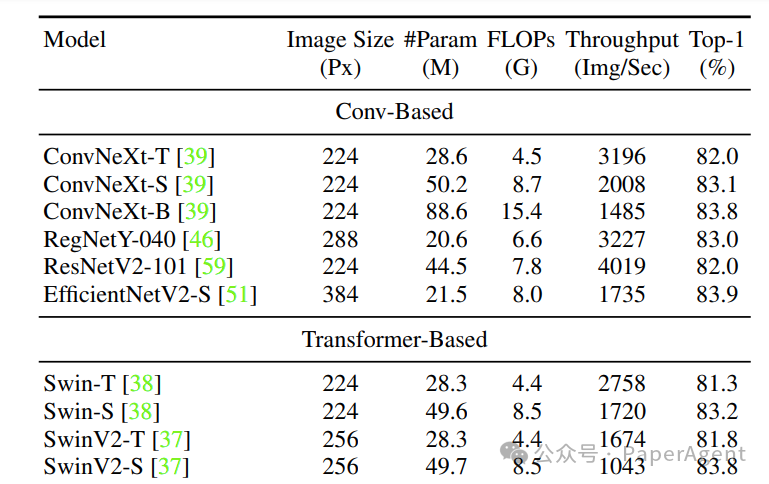

Table extraction. The prompt asked to extract the Throughput of Swin-T/TNT-S, Transformer; Llama 4 returned the SwinV2-T result instead.

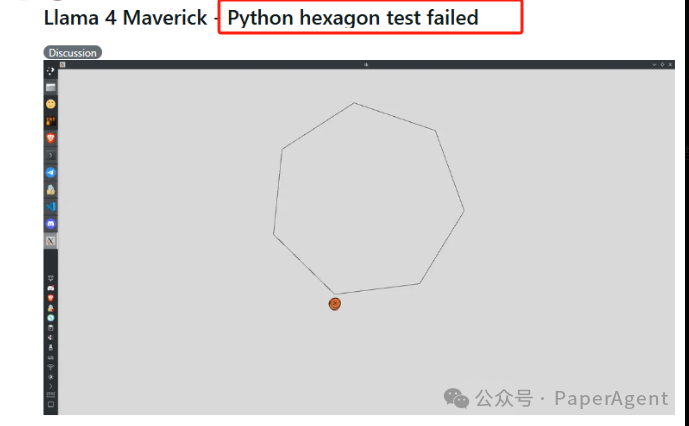

Coding with Llama 4: Llama 4 Maverick fails the Python hexagon test.

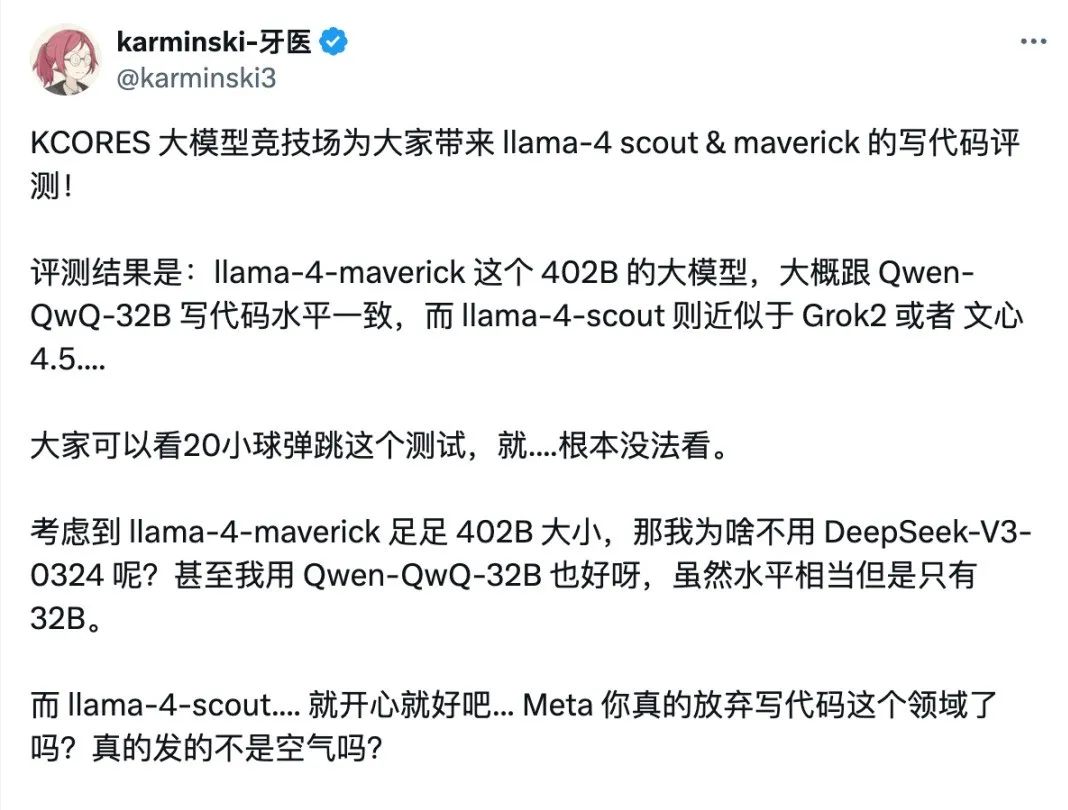

karminski's honest summary of Llama 4's coding ability: the 402B Llama-4-maverick performs at roughly the level of QwQ-32B, and DeepSeek-V3-0324 is the better pick.

https://x.com/karminski3

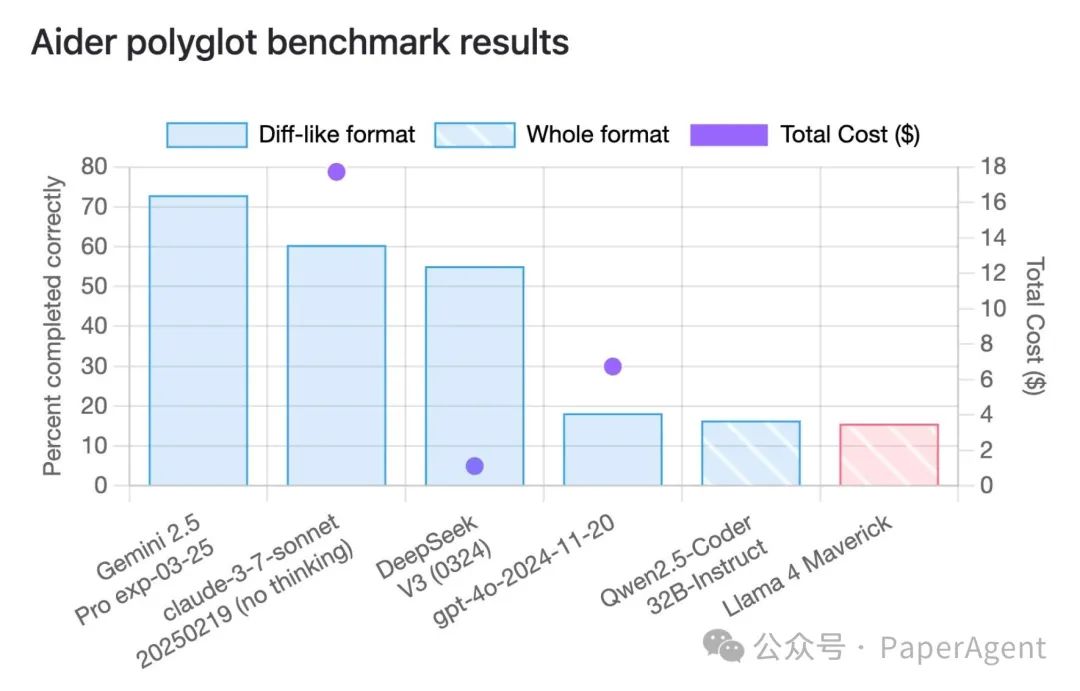

Llama 4 coding benchmark ranking

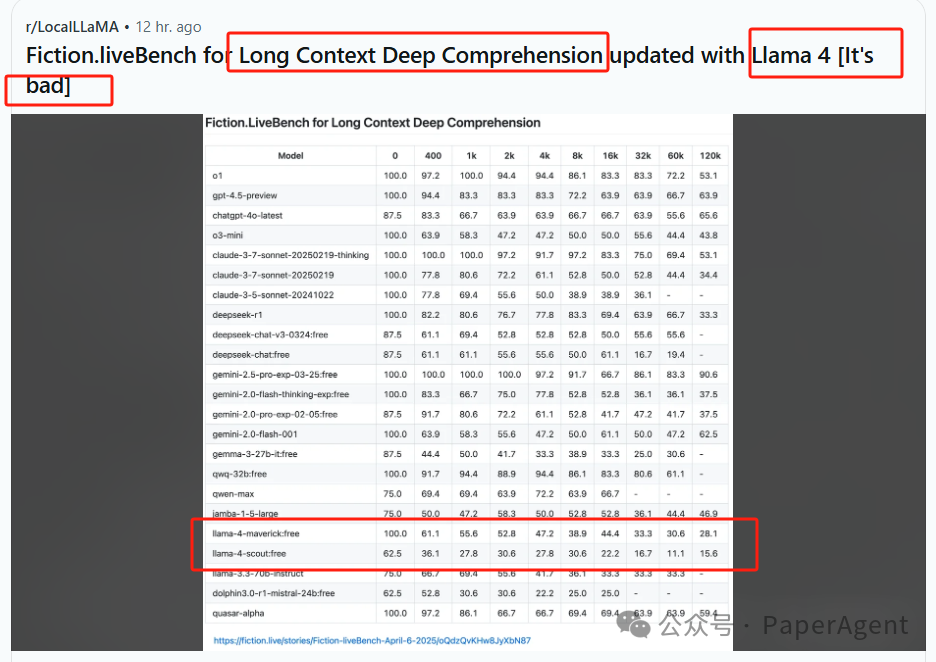

Llama 4's long-context deep comprehension results are poor.

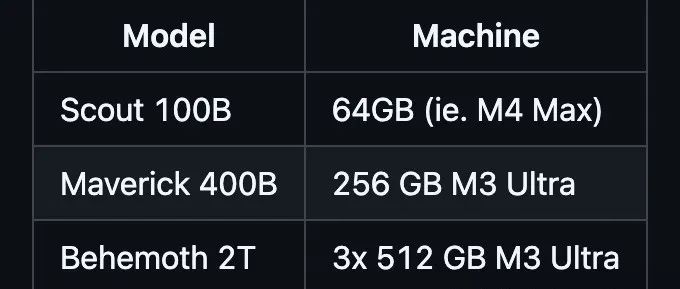

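A community member tallied the hardware needed to deploy the three Llama 4 model sizes locally: just three 512GB M3 Ultra machines are enough to host the 2T-parameter multimodal Llama 4 Behemoth on your own desk. Go for it!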
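The three-machine claim can be sanity-checked with a back-of-the-envelope estimate. The sketch below counts weight storage only and assumes a uniform quantization bit-width; the bit-widths tried here are assumptions for illustration, since the source does not say which precision the tally used. It also ignores KV cache, activations, and the portion of unified memory the OS keeps for itself.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes), weights only."""
    return num_params * bits_per_param / 8 / 1e9


def fits(num_params: float, bits_per_param: float,
         num_machines: int, gb_per_machine: float) -> bool:
    """Check whether the weights fit in the pooled memory of the machines."""
    total_gb = num_machines * gb_per_machine
    return weight_memory_gb(num_params, bits_per_param) <= total_gb


BEHEMOTH_PARAMS = 2e12  # "2T parameters", as quoted in the post

# Assumed bit-widths, not taken from the source.
for bits in (16, 8, 4):
    need = weight_memory_gb(BEHEMOTH_PARAMS, bits)
    ok = fits(BEHEMOTH_PARAMS, bits, num_machines=3, gb_per_machine=512)
    print(f"{bits}-bit: {need:.0f} GB needed, fits in 3 x 512 GB: {ok}")
```

Under these assumptions the pooled 1536 GB only covers the weights at roughly 4-bit precision; at 8-bit the weights alone would need about 2000 GB.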

III. Where to try Llama 4 (no local setup, free to play with)

NVIDIA:

https://build.nvidia.com/meta/llama-4-maverick-17b-128e-instruct

OpenRouter:

https://openrouter.ai/chat?models=meta-llama/llama-4-maverick:free

LMArena:

https://lmarena.ai/ -> llama-4-maverick-03-26-experimental

Hugging Face:

https://huggingface.co/spaces/openfree/Llama-4-Maverick-17B-Research
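For scripted access instead of the chat pages, one option is OpenRouter's OpenAI-compatible chat completions endpoint. The sketch below uses only the Python standard library. The model ID is copied from the OpenRouter chat URL listed above, and whether the free variant is still served is an assumption; the `OPENROUTER_API_KEY` environment variable name in the usage comment is likewise just a convention chosen for this example.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
# Copied from the chat link in this post; availability is an assumption.
MODEL_ID = "meta-llama/llama-4-maverick:free"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a single-turn chat completion request."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Example usage (needs network access and a valid key):
# import os
# print(ask("How many r's are in strawberry?", os.environ["OPENROUTER_API_KEY"]))
```

Keeping request construction separate from sending makes it possible to inspect the payload offline before spending any quota.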

https://x.com/sagnikPatra19/status/1908974601566138581

Llama 4 code: https://x.com/karminski3/status/1909029187392086221

code: https://aider.chat/docs/leaderboards/

Llama 4 long context: https://www.reddit.com/r/singularity/comments/1jsxpjc/fictionlivebench_for_long_context_deep/

(Text: PaperAgent)